Recently, with the rise of large language models (LLMs) and AI, we have seen innumerable advances in natural language processing. Models for text, code, and image/video generation have achieved human-like reasoning and performance. These models perform exceptionally well on general-knowledge questions. Models such as GPT-4o, Llama 2, Claude, and Gemini are trained on publicly available datasets. They often fail to answer domain- or subject-specific questions that may be more useful for various organizational tasks.

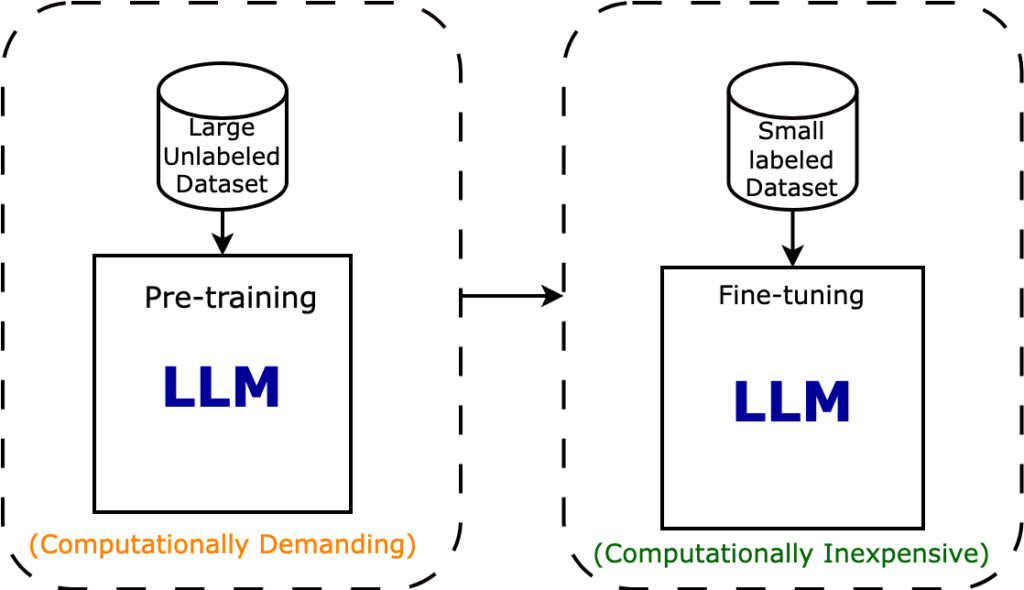

Fine-tuning helps developers and businesses adapt and train pre-trained models on a domain-specific dataset, achieving high accuracy and coherence on domain-related queries. Fine-tuning improves the model's performance without requiring extensive computing resources, because pre-trained models have already learned general language from vast public data.

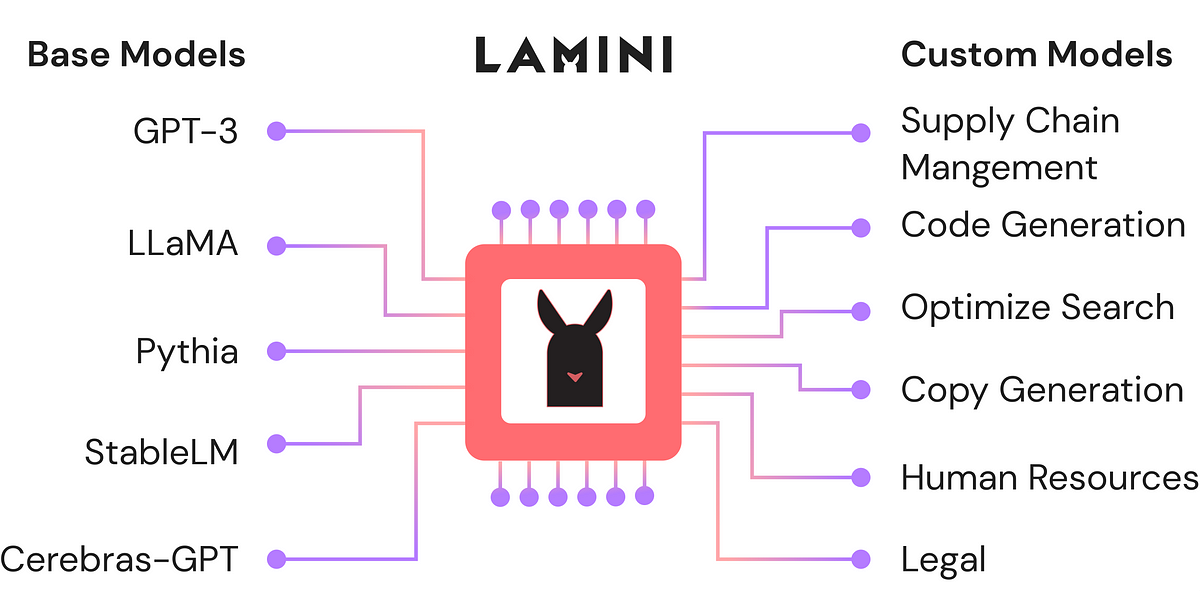

This blog will examine why we must fine-tune pre-trained models and how to do so using the Lamini platform, which allows us to fine-tune and evaluate models without using many computational resources.

So let’s get started!

Learning Objectives

- To explore the need to fine-tune open-source LLMs using Lamini

- To understand how to use Lamini and how instruction tuning applies to fine-tuned models

- To gain a hands-on understanding of the end-to-end process of fine-tuning models.

This article has been published as part of the Data Science Blogathon.

Why should we fine-tune large language models?

Pre-trained models are primarily trained on vast general data, so they are likely to lack context or domain-specific knowledge. Pre-trained models can also hallucinate and produce inaccurate or incoherent outputs. Popular LLM-based chatbots such as ChatGPT, Gemini, and Bing Chat have repeatedly shown that pre-trained models are prone to such inaccuracies. This is where fine-tuning comes to the rescue: it helps adapt a pre-trained LLM to subject-specific tasks and questions. Other ways to align models with your objectives include prompt engineering and few-shot prompting.

Still, fine-tuning remains the stronger option when it comes to performance metrics. Methods such as parameter-efficient fine-tuning (PEFT) and low-rank adaptation (LoRA) have further improved model fine-tuning and helped developers build better models. Let's look at how fine-tuning fits in a large language model context.

import pandas as pd

# Load the fine-tuning dataset (JSON Lines format)
filename = "lamini_docs.json"
instruction_dataset_df = pd.read_json(filename, lines=True)
instruction_dataset_df

# Convert it into a Python dictionary keyed by column name
examples = instruction_dataset_df.to_dict()

# Prepare a sample for fine-tuning, depending on which columns the dataset uses
if "question" in examples and "answer" in examples:
    text = examples["question"][0] + examples["answer"][0]
elif "instruction" in examples and "response" in examples:
    text = examples["instruction"][0] + examples["response"][0]
elif "input" in examples and "output" in examples:
    text = examples["input"][0] + examples["output"][0]
else:
    text = examples["text"][0]

# Use a prompt template to create an instruction-tuned dataset for fine-tuning
prompt_template_qa = """### Question:
{question}

### Answer:
{answer}"""

The above code shows that instruction tuning uses a prompt template to prepare a dataset for instruction tuning and to fine-tune a model on a specific dataset. We can fine-tune the pre-trained model for a specific use case using such a custom dataset.
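To make the role of the prompt template concrete, here is a minimal sketch that fills the question/answer template for a single row. The sample row and the `format_example` helper are hypothetical and only for illustration; real rows would come from the dataset file.

```python
# Hypothetical sample row; real rows come from the fine-tuning dataset.
sample = {
    "question": "What is instruction tuning?",
    "answer": "Training a model on prompt and response pairs.",
}

# Same layout as the question/answer template used in this article.
prompt_template_qa = """### Question:
{question}

### Answer:
{answer}"""


def format_example(example: dict) -> str:
    """Fill the question/answer template for one dataset row."""
    return prompt_template_qa.format(
        question=example["question"],
        answer=example["answer"],
    )


formatted = format_example(sample)
print(formatted)
```

Keeping the template in one place means every training example shares the same layout, and the model learns to associate the "### Answer:" marker with the start of a response.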

The following section will examine how Lamini can help fine-tune large language models (LLMs) for custom datasets.

How to fine-tune open-source LLMs using Lamini?

The Lamini platform lets users fine-tune and deploy models seamlessly, with little cost and few hardware setup requirements. Lamini offers an end-to-end stack to develop, train, tune, and deploy models according to the user's convenience and model requirements. Lamini provides its own hosted GPU computing network to train models cost-effectively.

Lamini's memory tuning tools and compute optimization help train and fine-tune models with high accuracy while controlling costs. Models can be hosted anywhere, on a private cloud or through Lamini's GPU network. Next, we will see a step-by-step guide to preparing data for fine-tuning large language models (LLMs) using the Lamini platform.

Data preparation

Generally, we need to select a domain-specific dataset and carry out data cleaning, prompting, tokenization, and storage to prepare data for any fine-tuning task. After loading the dataset, we preprocess it to convert it into an instruction-tuned dataset. We format each sample from the dataset into an instruction, question, and answer format to better fine-tune it for our use cases. Check out the source of the dataset using the link given here. Let's look at the code example for instruction tuning with tokenization for training using the Lamini platform.

import pandas as pd

# Load the dataset and store it as an instruction dataset
filename = "lamini_docs.json"
instruction_dataset_df = pd.read_json(filename, lines=True)
examples = instruction_dataset_df.to_dict()

# Pick the text fields, depending on which columns the dataset uses
if "question" in examples and "answer" in examples:
    text = examples["question"][0] + examples["answer"][0]
elif "instruction" in examples and "response" in examples:
    text = examples["instruction"][0] + examples["response"][0]
elif "input" in examples and "output" in examples:
    text = examples["input"][0] + examples["output"][0]
else:
    text = examples["text"][0]

# Prompt template that wraps each question
prompt_template = """### Question:
{question}

### Answer:"""

# Store fine-tuning examples in an instruction format
num_examples = len(examples["question"])
finetuning_dataset = []
for i in range(num_examples):
    question = examples["question"][i]
    answer = examples["answer"][i]
    text_with_prompt_template = prompt_template.format(question=question)
    finetuning_dataset.append({"question": text_with_prompt_template,
                               "answer": answer})

In the above example, we format the "question" and "answer" fields with a prompt template and store them separately for tokenization and padding before training the LLM.

Tokenize the dataset

# Tokenize the dataset with padding and truncation.
# `tokenizer` is the AutoTokenizer instance created in the model setup step later in this article.
def tokenize_function(examples):
    if "question" in examples and "answer" in examples:
        text = examples["question"][0] + examples["answer"][0]
    elif "input" in examples and "output" in examples:
        text = examples["input"][0] + examples["output"][0]
    else:
        text = examples["text"][0]

    # Padding: reuse the end-of-sequence token as the pad token
    tokenizer.pad_token = tokenizer.eos_token
    tokenized_inputs = tokenizer(
        text,
        return_tensors="np",
        padding=True,
    )

    # Cap the sequence length at 2048 tokens
    max_length = min(
        tokenized_inputs["input_ids"].shape[1],
        2048
    )

    # Truncation: drop tokens from the left if the text is too long
    tokenizer.truncation_side = "left"
    tokenized_inputs = tokenizer(
        text,
        return_tensors="np",
        truncation=True,
        max_length=max_length
    )

    return tokenized_inputs

The above code takes dataset samples as input and applies padding and truncation during tokenization to produce preprocessed, tokenized samples that can be used to fine-tune pre-trained models. Now that the dataset is ready, we will look into training and evaluating models using the Lamini platform.
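Padding and truncation are easier to picture on plain lists of token ids. The following is a toy sketch in pure Python, not the tokenizer library's implementation: `pad_and_truncate`, the token ids, and the choice to pad on the right are all assumptions made for illustration.

```python
def pad_and_truncate(token_ids, max_length, pad_id, truncation_side="left"):
    """Return a new list with exactly max_length token ids."""
    if len(token_ids) > max_length:
        if truncation_side == "left":
            # Keep the most recent tokens and drop the oldest ones.
            token_ids = token_ids[-max_length:]
        else:
            # Keep the first tokens and drop the tail.
            token_ids = token_ids[:max_length]
    # Fill the remaining positions with the pad id (right padding).
    padding = [pad_id] * (max_length - len(token_ids))
    return token_ids + padding


EOS_ID = 0  # hypothetical id; the article reuses the EOS token as the pad token

long_ids = [11, 12, 13, 14, 15, 16]
short_ids = [21, 22]

truncated = pad_and_truncate(long_ids, max_length=4, pad_id=EOS_ID)
padded = pad_and_truncate(short_ids, max_length=4, pad_id=EOS_ID)

print(truncated)  # [13, 14, 15, 16]
print(padded)     # [21, 22, 0, 0]
```

Left truncation keeps the end of each sample, which in a question-plus-answer string is where the answer sits.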

Fine-tuning process

Now that we have a dataset in an instruction-tuning format, we will load the dataset into the environment and fine-tune the pre-trained LLM using Lamini's easy-to-use training techniques.

Setting up an environment

To begin fine-tuning open-source LLMs using Lamini, we must first ensure that our code environment has suitable resources and libraries installed. Make sure you have a suitable machine with sufficient GPU resources, and install the necessary libraries such as transformers, datasets, torch, and pandas. You must securely load environment variables like api_url and api_key, generally from environment files. You can use packages like dotenv to load these variables. After preparing the environment, load the dataset and models for training.
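As a minimal sketch of loading credentials safely, the helper below reads a variable from the environment and fails early if it is missing. The `require_env` helper and the `EXAMPLE_API_URL` name are hypothetical; the optional `load_dotenv` call assumes the python-dotenv package is installed and that a `.env` file, if present, sits in the working directory.

```python
import os

try:
    # Optional: python-dotenv copies KEY=VALUE pairs from a .env file
    # into the process environment. Skipped if the package is missing.
    from dotenv import load_dotenv

    load_dotenv(dotenv_path=".env")
except ImportError:
    pass


def require_env(name: str) -> str:
    """Return the value of an environment variable or fail with a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Hypothetical variable name, set here only so the example runs end to end.
os.environ.setdefault("EXAMPLE_API_URL", "https://example.invalid")
api_url = require_env("EXAMPLE_API_URL")
print("API URL loaded:", bool(api_url))
```

Failing at startup with a named variable is usually easier to debug than a `None` value surfacing later inside an API call.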

import os
import lamini

lamini.api_url = os.getenv("POWERML__PRODUCTION__URL")
lamini.api_key = os.getenv("POWERML__PRODUCTION__KEY")

# Import the necessary libraries
import logging
import time
import yaml
import torch
import pandas as pd
import jsonlines

# Load the transformers architecture and helper utilities
from utilities import *
from transformers import AutoTokenizer
from transformers import AutoModelForCausalLM
from transformers import TrainingArguments
from llama import BasicModelRunner

logger = logging.getLogger(__name__)
global_config = None

Load Dataset

After setting up logging for monitoring and debugging, prepare your dataset using datasets or other data handling libraries like jsonlines and pandas. After loading the dataset, we will set up a tokenizer and model with training configurations for the training process.
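For readers unfamiliar with the JSON Lines format that a `.jsonl` dataset uses, here is a small sketch with only the standard library: one JSON object per line, written and read back. The records, the helper names, and the temporary file name are hypothetical placeholders.

```python
import json
import os
import tempfile

# Hypothetical records standing in for instruction-formatted samples.
records = [
    {"question": "### Question:\nWhat is Lamini?\n\n### Answer:", "answer": "An LLM platform."},
    {"question": "### Question:\nWhat is a token?\n\n### Answer:", "answer": "A unit of text."},
]


def save_jsonl(rows, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def load_jsonl(path):
    """Read a JSON Lines file back into a list of dictionaries."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


output_path = os.path.join(tempfile.gettempdir(), "example_docs.jsonl")
save_jsonl(records, output_path)
reloaded = load_jsonl(output_path)
print(len(reloaded), "records loaded")
```

Because each line is an independent JSON object, the file can be streamed or appended to without parsing it as a whole.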

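# Option 1: load the dataset from your local system
dataset_name = "lamini_docs.jsonl"
dataset_path = f"/content/{dataset_name}"
use_hf = False

# Option 2: load the dataset from the Hugging Face Hub instead
# (this overrides the local path above and needs use_hf = True)
dataset_path = "lamini/lamini_docs"
use_hf = True

Set up model, training config, and tokenizer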

Next, we select the model to fine-tune, "EleutherAI/pythia-70m", and define its configuration under training_config, specifying the pre-trained model name and dataset path. We initialize the AutoTokenizer with the model's tokenizer and set the padding token to the end-of-sequence token. Then, we tokenize the data and split it into training and testing datasets using a custom function, tokenize_and_split_data. Finally, we instantiate the base model using AutoModelForCausalLM, enabling it to perform causal language modeling tasks. The code also selects the compute device (GPU or CPU) for our fine-tuning process.

# Model name
model_name = "EleutherAI/pythia-70m"

# Training config
training_config = {
    "model": {
        "pretrained_name": model_name,
        "max_length": 2048
    },
    "datasets": {
        "use_hf": use_hf,
        "path": dataset_path
    },
    "verbose": True
}

# Set up the auto tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_name)
tokenizer.pad_token = tokenizer.eos_token

# tokenize_and_split_data is a custom helper imported from utilities
train_dataset, test_dataset = tokenize_and_split_data(training_config, tokenizer)

# Set up the baseline model as a causal language model
base_model = AutoModelForCausalLM.from_pretrained(model_name)

# Select a GPU if one is available, otherwise fall back to the CPU
device_count = torch.cuda.device_count()
if device_count > 0:
    logger.debug("Select GPU device")
    device = torch.device("cuda")
else:
    logger.debug("Select CPU device")
    device = torch.device("cpu")

# Move the model to the selected device
base_model.to(device)

Set up training to fine-tune the model

Finally, we set up the training arguments with hyperparameters. These include the learning rate, epochs, batch size, output directory, eval steps, save steps, warmup steps, and the evaluation and logging strategy, among others, to fine-tune the model on the custom training dataset.

max_steps = 3

# Trained model name
trained_model_name = f"lamini_docs_{max_steps}_steps"
output_dir = trained_model_name

training_args = TrainingArguments(
    # Learning rate
    learning_rate=1.0e-5,
    # Number of training epochs
    num_train_epochs=1,
    # Max steps to train for (each step is a batch of data)
    # Overrides num_train_epochs, if not -1
    max_steps=max_steps,
    # Batch size for training
    per_device_train_batch_size=1,
    # Directory to save model checkpoints
    output_dir=output_dir,
    # Other arguments
    overwrite_output_dir=False,  # Overwrite the content of the output directory
    disable_tqdm=False,  # Disable progress bars
    eval_steps=120,  # Number of update steps between two evaluations
    save_steps=120,  # After # steps model is saved
    warmup_steps=1,  # Number of warmup steps for learning rate scheduler
    per_device_eval_batch_size=1,  # Batch size for evaluation
    evaluation_strategy="steps",
    logging_strategy="steps",
    logging_steps=1,
    optim="adafactor",
    gradient_accumulation_steps=4,
    gradient_checkpointing=False,
    # Parameters for early stopping
    load_best_model_at_end=True,
    save_total_limit=1,
    metric_for_best_model="eval_loss",
    greater_is_better=False
)

After setting the training arguments, the code estimates the model's floating-point operations (FLOPs) based on the input size and gradient accumulation steps, giving insight into the computational load. It also assesses memory usage, estimating the model's footprint in gigabytes. Once these calculations are complete, a Trainer is initialized with the base model, FLOPs, total training steps, and the prepared datasets for training and evaluation. This setup optimizes the training process and enables resource utilization monitoring, which is critical for efficiently handling large-scale model fine-tuning. At the end of training, the fine-tuned model is ready for deployment on the cloud to serve users as an API.
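To get a feel for these numbers before running anything, the arithmetic can be sketched by hand. This is a back-of-the-envelope estimate under stated assumptions, not the library's exact calculation: it uses the common rule of thumb of about 6 FLOPs per parameter per token for a forward and backward pass, assumes 32-bit weights, and takes a nominal 70 million parameters for the model. The helper names are hypothetical, and the figures printed by the real model may differ (for example, if embedding parameters are excluded).

```python
def effective_batch_size(per_device_batch, grad_accum_steps, num_devices=1):
    """Sequences that contribute to a single optimizer update."""
    return per_device_batch * grad_accum_steps * num_devices


def estimate_training_flops(num_params, tokens_per_sequence, num_sequences):
    """Rule of thumb: about 6 FLOPs per parameter per token (forward + backward)."""
    return 6 * num_params * tokens_per_sequence * num_sequences


def estimate_weights_gb(num_params, bytes_per_param=4):
    """Memory needed for the weights alone, assuming 32-bit floats."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 70_000_000  # assumed nominal parameter count
MAX_LENGTH = 2048        # max sequence length from the training config
MAX_STEPS = 3            # optimizer steps, as in the training arguments

batch = effective_batch_size(per_device_batch=1, grad_accum_steps=4)
total_sequences = batch * MAX_STEPS
flops_per_step = estimate_training_flops(NUM_PARAMS, MAX_LENGTH, batch)
weights_gb = estimate_weights_gb(NUM_PARAMS)

print("Effective batch size:", batch)
print("Sequences seen in training:", total_sequences)
print("Estimated GFLOPs per optimizer step:", flops_per_step / 1e9)
print("Estimated weight memory (GB):", weights_gb)
```

With a per-device batch size of 1 and 4 gradient accumulation steps, each optimizer update sees 4 sequences, so 3 steps cover only 12 sequences. That is enough for a smoke test of the pipeline but not for meaningful fine-tuning.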

# Estimate the compute needed per optimizer step
model_flops = (
    base_model.floating_point_ops(
        {
            "input_ids": torch.zeros(
                (1, training_config["model"]["max_length"])
            )
        }
    )
    * training_args.gradient_accumulation_steps
)

print(base_model)
print("Memory footprint", base_model.get_memory_footprint() / 1e9, "GB")
print("Flops", model_flops / 1e9, "GFLOPs")

# Trainer here is the custom class imported from utilities,
# which accepts model_flops and total_steps
trainer = Trainer(
    model=base_model,
    model_flops=model_flops,
    total_steps=max_steps,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=test_dataset,
)

Conclusion

In conclusion, this article provides an in-depth guide to understanding the need to fine-tune LLMs using the Lamini platform. It gives an overview of why we should fine-tune a model for custom datasets and business use cases, and of the benefits of using Lamini's tools. We also saw a step-by-step guide to fine-tuning an LLM on a custom dataset with Lamini's tools. Let's summarize the key takeaways of the blog.

Key Takeaways

- Fine-tuning models is worth learning as an alternative to prompt engineering and retrieval-augmented generation methods.

- Platforms like Lamini offer easy-to-use hardware setup and deployment techniques for fine-tuned models to serve user requirements.

- We prepared data for the fine-tuning task and set up a pipeline to train a base model using a wide range of hyperparameters.

Explore the code behind this article on GitHub.

The media shown in this article are not owned by Analytics Vidhya and are used at the author's discretion.

Frequently Asked Questions

A. The fine-tuning process begins with understanding the context-specific requirements, followed by dataset preparation, tokenization, and setting up training configurations such as hardware requirements, training settings, and training arguments. Finally, a training job is run for model development.

A. Fine-tuning an LLM means training a base model on a specific custom dataset. This generates accurate, context-relevant outputs for specific queries according to the use case.

A. Lamini offers integrated language model fine-tuning, inference, and GPU setup for seamless, efficient, and cost-effective development of LLMs.